CaPhy: Capturing Physical Properties for Animatable Human Avatars

ICCV 2023

Zhaoqi Su¹, Liangxiao Hu², Siyou Lin¹, Hongwen Zhang¹, Shengping Zhang², Justus Thies³, Yebin Liu¹

¹Tsinghua University, Beijing, China

²Harbin Institute of Technology, Weihai, Shandong, China

³Max Planck Institute for Intelligent Systems, Tübingen, Germany

Abstract

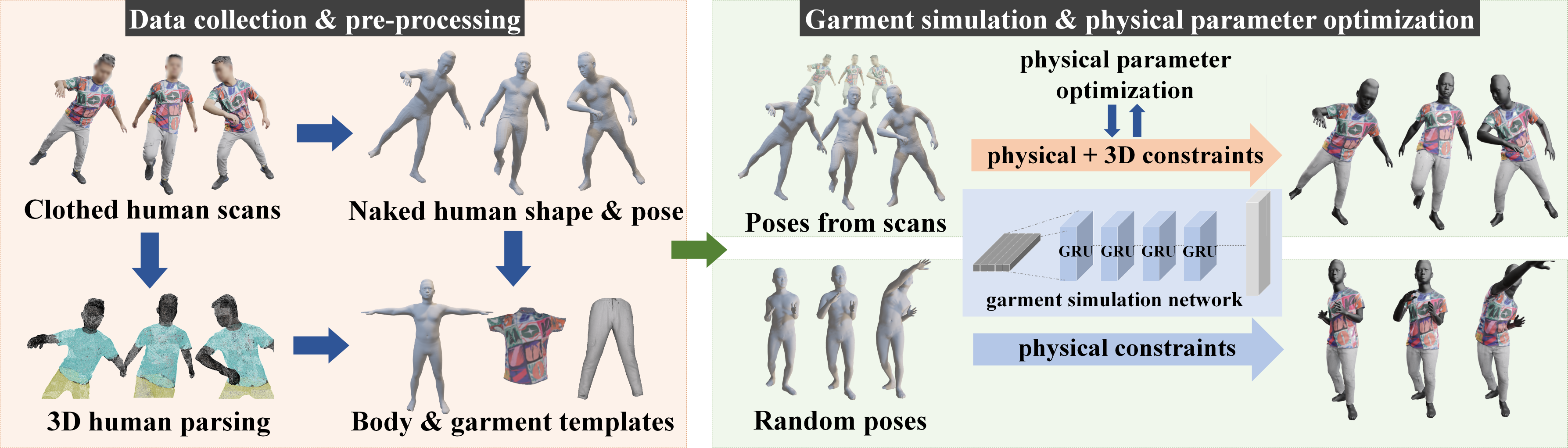

We present CaPhy, a novel method for reconstructing animatable human avatars with realistic dynamic properties for clothing. Specifically, we aim to capture both the geometric and the physical properties of the clothing from real observations. This allows us to animate the avatar in novel poses with physically correct deformations and wrinkles of the clothing. To this end, we combine unsupervised training with physics-based losses and 3D-supervised training on scanned data, reconstructing a dynamic clothing model that is physically realistic and conforms to the human scans. We also optimize the physical parameters of the underlying physical model from the scans by introducing gradient constraints on the physics-based losses. In contrast to previous work on 3D avatar reconstruction, our method generalizes to novel poses with realistic dynamic cloth deformations. Experiments on several subjects demonstrate that our method can estimate the physical properties of the garments, yielding quantitative and qualitative results superior to those of previous methods.
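To make the idea of fitting physical parameters through gradient constraints more concrete, here is a toy sketch that rests on one assumed interpretation. A captured garment at rest should sit near an equilibrium of its physical energy, so the energy gradient with respect to its free vertices should vanish. A stiffness value can therefore be estimated by minimizing the squared norm of that gradient on the observed shape. Everything below is hypothetical and is not CaPhy's cloth model: the 1D-topology mass-spring chain, gravity term, function names (`spring_gravity_grad_parts`, `estimate_stiffness`, `hanging_chain`) and parameter values. Because this energy gradient is linear in the stiffness k, the fit reduces to a closed-form least-squares solution.

```python
import numpy as np

# Hypothetical toy model (not the paper's): a chain of particles joined by springs.
# E(x; k) = sum_edges 0.5 * k * (|x_{i+1} - x_i| - rest_len)^2 + sum_i mass * g * height_i


def spring_gravity_grad_parts(x, rest_len, mass, g=9.81):
    """Split dE/dx into k * a + b, where a comes from the springs and b from gravity."""
    d = x[1:] - x[:-1]                                   # edge vectors, shape (n_edges, dim)
    length = np.linalg.norm(d, axis=1, keepdims=True)    # edge lengths, shape (n_edges, 1)
    f = (length - rest_len) * d / length                 # stretch times edge direction (per unit k)
    a = np.zeros_like(x)
    a[1:] += f                                           # dE/dx_{i+1} contribution of edge i
    a[:-1] -= f                                          # dE/dx_i contribution of edge i
    b = np.zeros_like(x)
    b[:, -1] = mass * g                                  # gravity acts on the last coordinate (height)
    return a, b


def estimate_stiffness(x_obs, rest_len, mass, pinned, g=9.81):
    """Fit k by minimizing ||dE/dx||^2 over the free (unpinned) vertices of x_obs."""
    a, b = spring_gravity_grad_parts(x_obs, rest_len, mass, g)
    free = np.ones(len(x_obs), dtype=bool)
    free[pinned] = False
    a, b = a[free].ravel(), b[free].ravel()
    # The gradient is linear in k, so argmin_k ||k * a + b||^2 = -(a . b) / (a . a).
    return -np.dot(a, b) / np.dot(a, a)


def hanging_chain(n, k, rest_len, mass, g=9.81, dim=3):
    """Analytic equilibrium of a vertical chain with vertex 0 pinned at the origin."""
    x = np.zeros((n + 1, dim))
    for i in range(1, n + 1):
        below = n - i + 1                                # particles i..n hang from edge (i-1, i)
        x[i, -1] = x[i - 1, -1] - (rest_len + mass * g * below / k)
    return x


if __name__ == "__main__":
    x_obs = hanging_chain(n=10, k=250.0, rest_len=0.05, mass=0.01)
    print(estimate_stiffness(x_obs, rest_len=0.05, mass=0.01, pinned=[0]))
```

On this noise-free synthetic chain the fit recovers approximately the stiffness used to generate it (250.0). With real scans, the observed shape is only approximately at equilibrium, so the same least-squares fit would return a best-compromise value rather than an exact one.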

Method

Results